Nowadays there is a lot of demand for AWS, and it is easy to adopt AWS services such as EC2 and S3. However, we may not always be able to deploy new instances and reinstall applications; in some scenarios we need to migrate a complete VM from a physical datacenter to AWS. This post discusses how to do a cold migration of a VM to AWS.

VM Import/Export enables us to import virtual machine (VM) images from our existing virtualization environment to Amazon EC2 as Amazon Machine Images (AMIs), and then deploy new instances from those AMIs. This lets us copy our VM image catalog to Amazon EC2, or create a repository of VM images for backup and disaster recovery.

The following software will be used to complete this cold migration:

- VMware Workstation
- OVF Tool (ovftool)

Step 1: Set up a VM

Install a Windows Server 2012 R2 VM in VMware Workstation using the Windows Server 2012 R2 ISO.

Step 2: Exporting the VM

We need to export this VM as an OVA, VMDK, VHD, or RAW file. Power off the VM, then go to File -> Export to OVF.

The VM is now exported in OVF format.

Step 3: Conversion of OVF to OVA

The OVF file must be converted to an OVA file before the VM can be imported into AWS. The VMware OVF Tool (ovftool) performs this conversion. After installing ovftool, run the commands below; the second command converts the .ovf file to .ova.

cd "Program Files\VMware\VMware OVF Tool"

ovftool.exe H:\Image\Windows-2012-Server.ovf H:\Image\Windows-2012-Server.ova

The VM is now converted from OVF to OVA.
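The conversion can also be scripted. The following is a minimal Python sketch, assuming ovftool is installed under C:\Program Files (the drive letter is an assumption; the command above only gives the folder) and that the paths match the example. The function names `build_ovftool_command` and `convert_ovf_to_ova` are illustrative and not part of any VMware tooling.

```python
import subprocess
from pathlib import PureWindowsPath

# Assumed install location; adjust the drive and folder to match your machine.
OVFTOOL = r"C:\Program Files\VMware\VMware OVF Tool\ovftool.exe"


def build_ovftool_command(ovf_path, ova_path=None, ovftool=OVFTOOL):
    """Return the argument list that converts an .ovf package to a single .ova file."""
    source = PureWindowsPath(ovf_path)
    if source.suffix.lower() != ".ovf":
        raise ValueError(f"expected an .ovf file, got {ovf_path!r}")
    # Default target: same folder and base name, with the .ova extension.
    target = PureWindowsPath(ova_path) if ova_path else source.with_suffix(".ova")
    return [ovftool, str(source), str(target)]


def convert_ovf_to_ova(ovf_path, ova_path=None):
    """Run ovftool; raises CalledProcessError if it exits with a non-zero code."""
    subprocess.run(build_ovftool_command(ovf_path, ova_path), check=True)
```

Calling `convert_ovf_to_ova(r"H:\Image\Windows-2012-Server.ovf")` would run the same conversion as the manual command, writing the .ova file next to the .ovf file.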

Step 4: Uploading the OVA to S3

To upload this OVA to S3, we need a user and an IAM role in AWS. We also need to install the 64-bit AWS CLI.

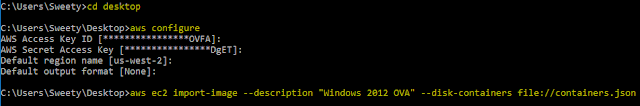

After installing the AWS CLI, run the following commands from a command prompt.

aws configure (enter the access key, secret key, and region)

aws s3 ls

aws s3 cp Windows-2012-Server.ova s3://migratebucket/ (copies the OVA file to the S3 bucket)

aws s3 ls s3://migratebucket/ (lists the uploaded OVA in S3)
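Because an OVA is usually several gigabytes, it can be worth checking the file locally before running the aws s3 cp command above. An OVA is a tar archive that bundles the OVF descriptor with the disk files, so a rough check is possible with Python's standard library. This is only a sketch: the helper name `summarize_ova` is illustrative, and it assumes the disks are VMDK files, as produced by a VMware export.

```python
import tarfile


def summarize_ova(path):
    """Return the OVF descriptor and disk names found in an OVA, or raise ValueError."""
    if not tarfile.is_tarfile(path):
        raise ValueError(f"{path} is not a tar archive, so it is not a valid OVA")
    with tarfile.open(path) as archive:
        names = archive.getnames()
    descriptors = [n for n in names if n.lower().endswith(".ovf")]
    # Assumption: a VMware export stores its disks as .vmdk files.
    disks = [n for n in names if n.lower().endswith(".vmdk")]
    if len(descriptors) != 1:
        raise ValueError(f"expected exactly one .ovf descriptor, found {len(descriptors)}")
    if not disks:
        raise ValueError("no .vmdk disk found in the archive")
    return {"descriptor": descriptors[0], "disks": disks}
```

If `summarize_ova("Windows-2012-Server.ova")` raises an error, re-running the conversion in Step 3 is likely cheaper than uploading a broken file.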

Step 5: Importing OVA

To import the OVA as an image, a few steps need to be followed.

1. Open Notepad, enter the policy below, and save it as trust-policy.json

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "vmie.amazonaws.com" },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:Externalid": "vmimport"
        }
      }
    }
  ]
}

As an AWS user, run the following command from the folder where trust-policy.json is saved:

aws iam create-role --role-name vmimport --assume-role-policy-document file://trust-policy.json
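Hand-typed JSON is easy to get wrong, and the AWS CLI rejects a malformed policy document. As a minimal sketch, the Python helper below parses a policy file and reports where the JSON is invalid, so the file can be fixed before the create-role command is run. The name `check_policy_file` is illustrative; the only checks are valid JSON and the presence of the two top-level keys used in the policy above.

```python
import json


def check_policy_file(path):
    """Parse an IAM policy file and return it as a dict, raising ValueError on problems."""
    # utf-8-sig tolerates the byte order mark some editors add when saving as UTF-8.
    with open(path, encoding="utf-8-sig") as handle:
        try:
            policy = json.load(handle)
        except json.JSONDecodeError as err:
            raise ValueError(
                f"{path}: invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"
            ) from err
    if not isinstance(policy, dict):
        raise ValueError(f"{path}: expected a JSON object at the top level")
    for key in ("Version", "Statement"):
        if key not in policy:
            raise ValueError(f"{path}: missing top-level key {key!r}")
    return policy
```

For example, `check_policy_file("trust-policy.json")` returns the parsed policy when the file is well formed, and raises an error naming the line and column otherwise.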

2. Enter the policy below in Notepad and save it as role-policy.json

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketLocation"
      ],
      "Resource": [
        "arn:aws:s3:::disk-image-file-bucket"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::disk-image-file-bucket/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:ModifySnapshotAttribute",
        "ec2:CopySnapshot",
        "ec2:RegisterImage",
        "ec2:Describe*"
      ],
      "Resource": "*"
    }
  ]
}

Here disk-image-file-bucket stands for the bucket name; replace it with the name of your own bucket (migratebucket in this walkthrough).

Now run the command

aws iam put-role-policy --role-name vmimport --policy-name vmimport --policy-document file://role-policy.json
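Since the bucket name appears in two places in role-policy.json, generating the file from a single value avoids a mismatch between them. The sketch below reproduces the policy above for a given bucket; the function names are illustrative, and the statements are copied from the policy shown in this post rather than from any other source.

```python
import json


def build_role_policy(bucket):
    """Return the vmimport role policy from this post for the given S3 bucket name."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [bucket_arn],
            },
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
            {
                "Effect": "Allow",
                "Action": [
                    "ec2:ModifySnapshotAttribute",
                    "ec2:CopySnapshot",
                    "ec2:RegisterImage",
                    "ec2:Describe*",
                ],
                "Resource": "*",
            },
        ],
    }


def write_role_policy(bucket, path="role-policy.json"):
    """Write the policy to disk so it can be passed to aws iam put-role-policy."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(build_role_policy(bucket), handle, indent=2)
```

Running `write_role_policy("migratebucket")` would produce a role-policy.json for the bucket used in Step 4.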

3. Finally, import the VM. Open Notepad, enter the content below, and save the file as containers.json.

[
  {
    "Description": "Windows 2008 OVA",
    "Format": "ova",
    "UserBucket": {
      "S3Bucket": "my-import-bucket",
      "S3Key": "vms/my-windows-2008-vm.ova"
    }
  }
]

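S3Bucket -> the bucket name (migratebucket in this walkthrough)

S3Key -> the path (key) of the OVA file within the bucket. The file does not need to be made public, because the vmimport role created above grants read access to the bucket.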

aws ec2 import-image --description "Windows 2012 OVA" --disk-containers file://containers.json

(This command starts the import using the settings in the containers.json file.)

The OVA import has started and the task is in a pending state.
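The sample containers.json uses placeholder values, so it has to be edited to point at the real bucket and file. As a sketch, the Python function below builds the same structure from the values used earlier in this post. The function name is illustrative, and the accepted formats are limited to the four this post mentions (OVA, VMDK, VHD, RAW), which is an assumption of the sketch rather than a statement of everything AWS supports.

```python
import json
from pathlib import PurePosixPath

# Formats named in this post; treated here as the allowed set.
KNOWN_FORMATS = {"ova", "vmdk", "vhd", "raw"}


def build_disk_containers(bucket, key, description):
    """Return the list structure expected in containers.json for one image file."""
    fmt = PurePosixPath(key).suffix.lstrip(".").lower()
    if fmt not in KNOWN_FORMATS:
        raise ValueError(f"unexpected file extension in S3 key: {key!r}")
    return [
        {
            "Description": description,
            "Format": fmt,
            "UserBucket": {"S3Bucket": bucket, "S3Key": key},
        }
    ]


def write_disk_containers(bucket, key, description, path="containers.json"):
    """Write containers.json so it can be passed to aws ec2 import-image."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(build_disk_containers(bucket, key, description), handle, indent=2)
```

For the upload in Step 4, the call would be `write_disk_containers("migratebucket", "Windows-2012-Server.ova", "Windows 2012 OVA")`.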

Step 6: Check the import task

To check the status of the import, run:

aws ec2 describe-import-image-tasks --import-task-ids import-ami-ffrnccwy

(The import task ID is returned in the output of the import-image command.)

After several checks, the status of the import shows "completed".

Finally, the AMI is created in AWS. Now that we have an AMI, we can launch it as an instance or copy it to another region.
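Rather than re-running the status command by hand, the check can be repeated in a loop. The following Python sketch calls the same AWS CLI command and waits until the task reports "completed". It assumes the `aws` executable is on the PATH and already configured, and that a failed import ends up with a status of "deleting" or "deleted"; the function names, polling interval, and check limit are all illustrative choices.

```python
import json
import subprocess
import time


def fetch_import_task(task_id):
    """Run the AWS CLI and return the description of one import task as a dict."""
    result = subprocess.run(
        ["aws", "ec2", "describe-import-image-tasks",
         "--import-task-ids", task_id, "--output", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)["ImportImageTasks"][0]


def wait_for_import(task_id, fetch=fetch_import_task, interval=60, max_checks=120):
    """Poll until the import completes and return the AMI ID reported by the task."""
    for _ in range(max_checks):
        task = fetch(task_id)
        status = task.get("Status")
        print(status, task.get("Progress", ""), task.get("StatusMessage", ""))
        if status == "completed":
            return task.get("ImageId")
        # Assumption: a cancelled or failed import is reported as deleting/deleted.
        if status in ("deleting", "deleted"):
            raise RuntimeError(f"import {task_id} failed: {task.get('StatusMessage', '')}")
        time.sleep(interval)
    raise TimeoutError(f"import {task_id} did not complete after {max_checks} checks")
```

With the task ID from this post, `wait_for_import("import-ami-ffrnccwy")` would print one status line per check and return the AMI ID once the import finishes. The `fetch` parameter exists so the loop can be tried out with a stand-in function instead of a real AWS call.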

Step 7: Verifying the VM imported as an AMI

Launch an instance from the AMI (the image of the VM) created in our AWS EC2 environment. Connect to the instance using Remote Desktop Connection and enter the password that was set during installation of the VM in VMware Workstation.

The VM is now imported successfully, along with its applications 😊

Note: This process performs a cold migration of a VM. To migrate a physical server, the process stays the same apart from first converting the physical machine to a VM. A future post will cover how to convert a physical machine to a VM.

Hope this post will help you.